That's not The Rock

Testing the top image editing models across 100 recursive Dwaynes.

Image editing models are fantastic, but they generally degrade if you edit the edit of the edit's edit.

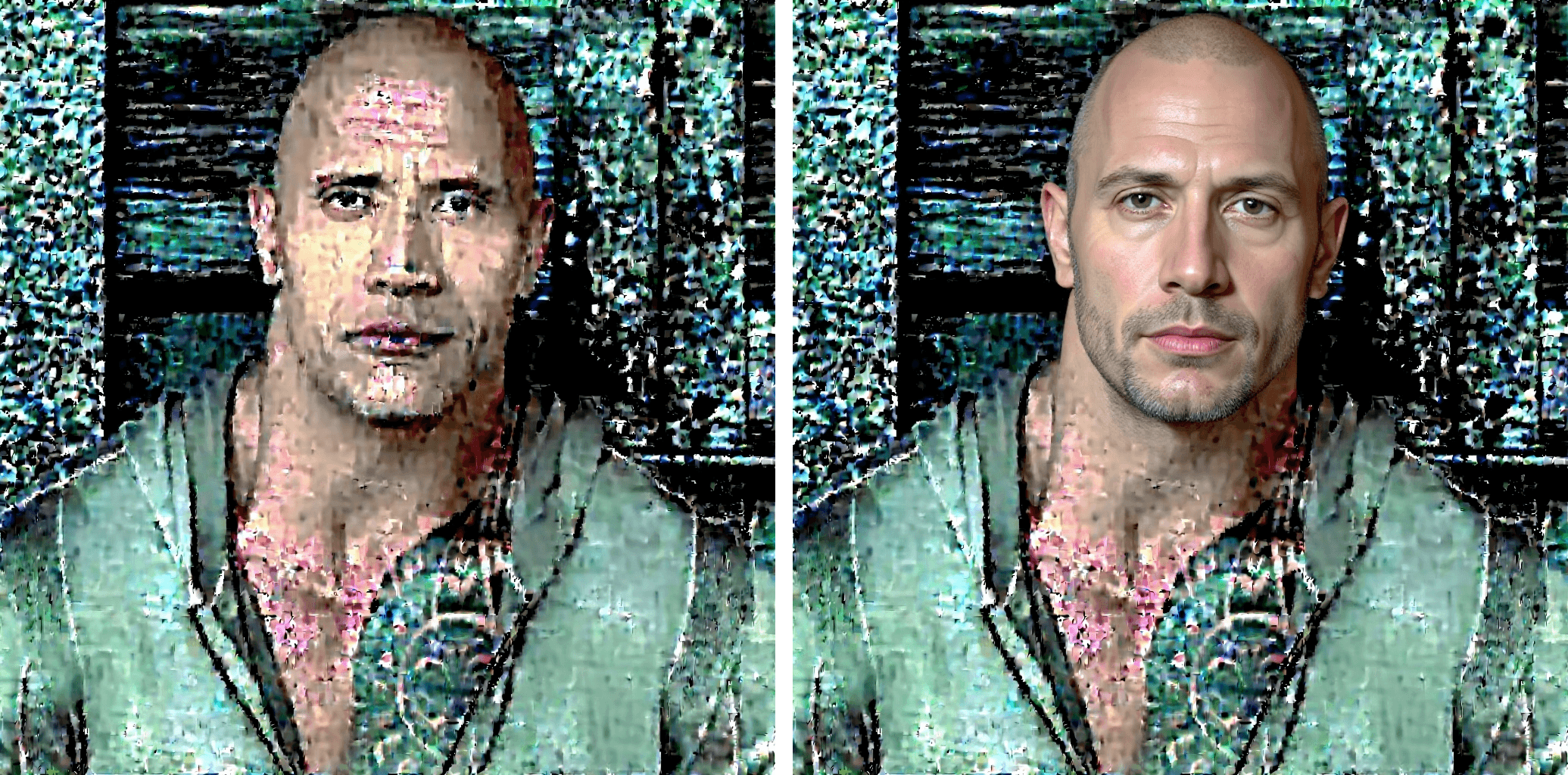

Earlier this year, u/Foreign_Builder_2238 demonstrated this by asking ChatGPT to endlessly recreate a photo of Dwayne 'The Rock' Johnson, instructing it to "Create an exact replica of this image, don't change a thing." I decided to put the current top image editing models (Nano Banana Pro, SeeDream 4, Qwen, and friends) through that same recursive abuse to see how they unravel when looping their own outputs 100 times.
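The loop itself is simple to sketch. The version below is a minimal illustration, not the harness used for these tests: `run_recursion`, `edit_fn`, and `toy_edit` are hypothetical names, and `edit_fn` stands in for whatever call sends an image and a prompt to a given model. Only the prompt text comes from the original experiment.

```python
from typing import Callable, List, TypeVar

Image = TypeVar("Image")

# Prompt quoted from the original Reddit experiment.
PROMPT = "Create an exact replica of this image, don't change a thing."


def run_recursion(
    edit_fn: Callable[[Image, str], Image],
    original: Image,
    generations: int = 100,
) -> List[Image]:
    """Feed each output back in as the next input and keep every generation."""
    outputs: List[Image] = []
    current = original
    for _ in range(generations):
        current = edit_fn(current, PROMPT)  # hypothetical model call
        outputs.append(current)
    return outputs


def toy_edit(image, prompt):
    """Toy stand-in for a model: nudge every pixel 1% toward mid-grey (0.5)."""
    return [p + 0.01 * (0.5 - p) for p in image]


# "Images" here are just lists of floats in [0, 1].
frames = run_recursion(toy_edit, [0.0, 1.0], generations=100)
print(len(frames), frames[-1])
```

Swapping `toy_edit` for a real API wrapper is the only change needed to run the same loop against an actual model. Keeping every intermediate frame is what makes per-generation scoring possible afterwards.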

The findings were both interesting and ridiculous, with every model tested behaving slightly differently. As a measure, I calculated the structural similarity index (SSIM) of each generated image against the original. These are graphed for each model below. Note that SSIM is a flawed measure here: minor differences in Dwayne's position will destroy the score even when the image is otherwise coherent.
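To see why a small shift hurts the score so much, here is a rough pure-Python sketch of the SSIM formula. It is a simplified single-window variant computed over the whole image at once. Library implementations such as scikit-image's `structural_similarity` average the same formula over sliding local windows, and the scores in this post may have been computed differently. The function name `global_ssim` and the striped test row are assumptions made for illustration.

```python
def _mean(values):
    return sum(values) / len(values)


def global_ssim(x, y, data_range=255.0):
    """Single-window SSIM for two equal-length grayscale pixel sequences."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and the same length")
    # Standard stabilising constants (K1 = 0.01, K2 = 0.03).
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = _mean(x), _mean(y)
    var_x = _mean([(a - mu_x) ** 2 for a in x])
    var_y = _mean([(b - mu_y) ** 2 for b in y])
    cov = _mean([(a - mu_x) * (b - mu_y) for a, b in zip(x, y)])
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )


# A row of black/white stripes, each 4 pixels wide.
row = ([0] * 4 + [255] * 4) * 8
shift_2px = row[2:] + row[:2]  # same stripes, moved 2 pixels
shift_4px = row[4:] + row[:4]  # same stripes, moved 4 pixels

print(global_ssim(row, row))        # identical images
print(global_ssim(row, shift_2px))  # close to zero
print(global_ssim(row, shift_4px))  # strongly negative
```

All three rows contain exactly the same stripes, yet the shifted copies score near zero or below. The metric compares pixels position by position, so it cannot tell "moved slightly" from "different image".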

The SSIM trend is interesting, but what matters more, I think, is how many recursions it takes before the subject simply isn't The Rock anymore. So, I’ve tracked the "That's Not The Rock" (TNTR) score for each model—the exact generation where Dwayne ceases to be Dwayne. Since this is subjective, I've also included a way for you to cast your own vote on when he loses his Rock-ness.

Note: Most models were only tested once, so it is possible that we'd see entirely different results on retesting.

GPT-Image1

The original Reddit post went up seven months before I wrote this; let's check in on how things have changed.

GPT-Image1-mini

Fortunately, the results for GPT-Image1-mini were a lot more interesting.

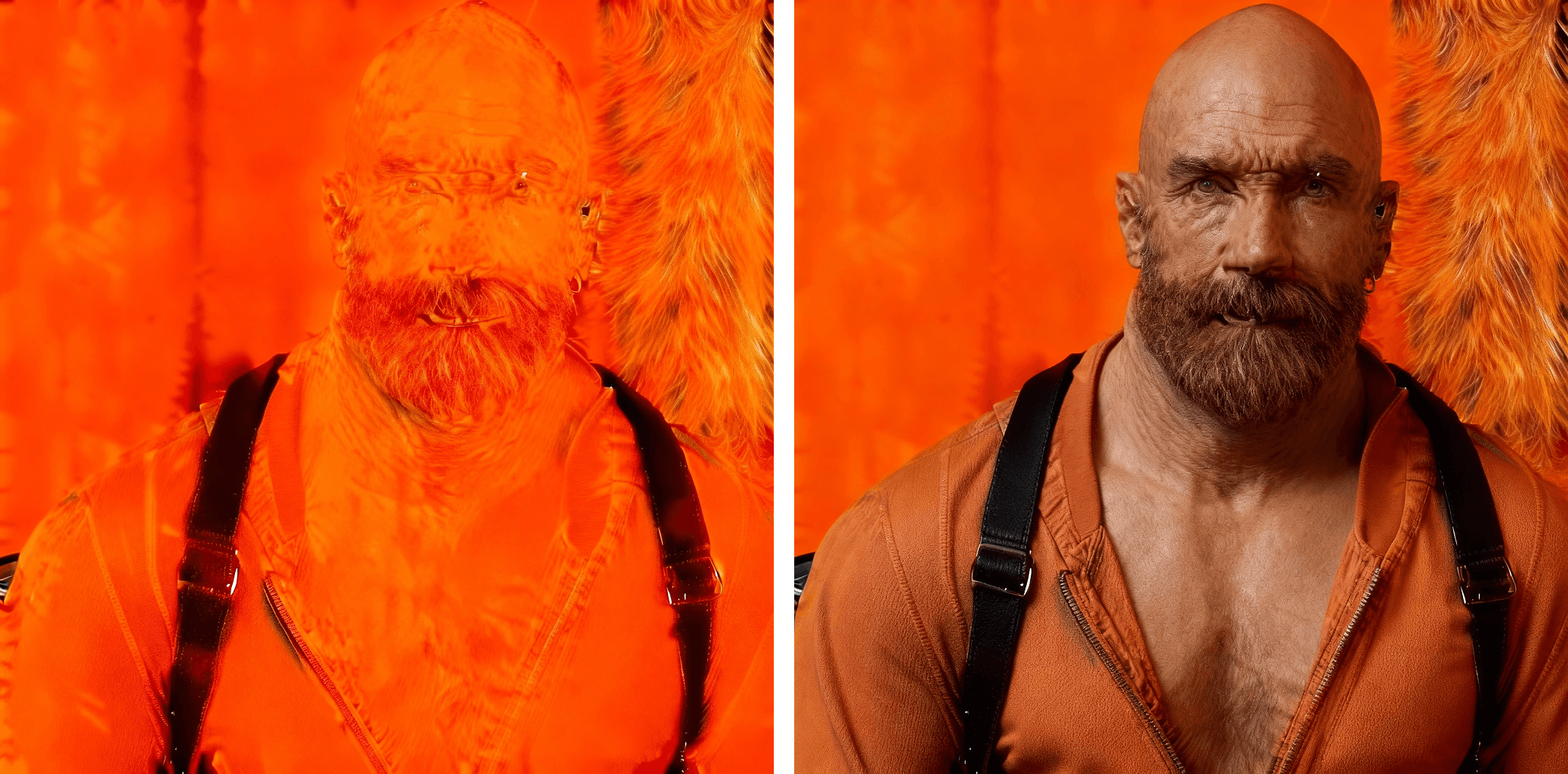

Nano Banana Pro

Currently touted as the SOTA of image editing models, how does it hold up?

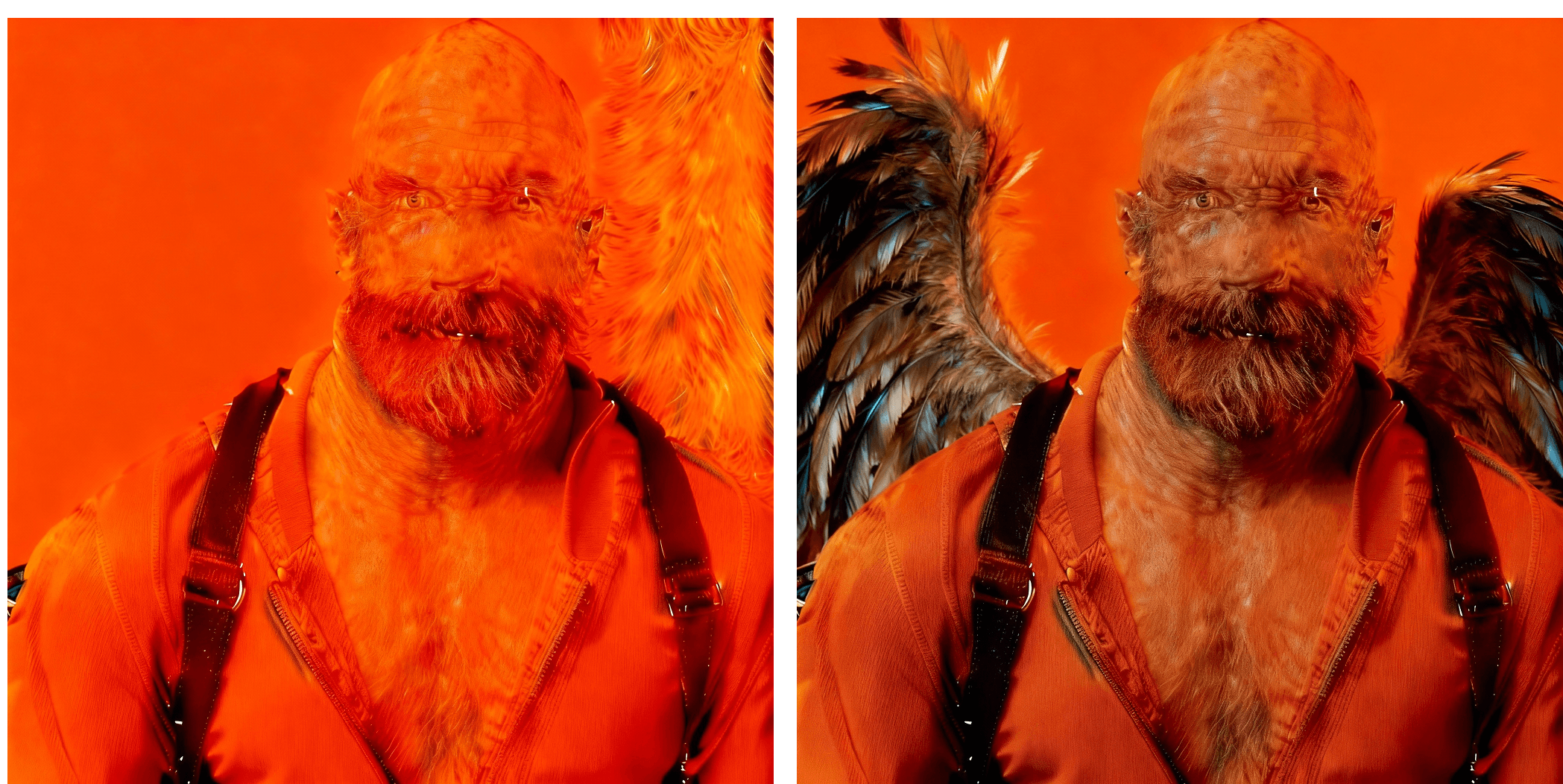

SeeDream 4

SeeDream 4 is currently the runner-up to Nano Banana Pro in the leaderboards. The pattern here trends to red, with several sudden jumps in image coherence.

Qwen Image Edit

A highly trainable model that has recently been gaining popularity following the release of a few nice fine-tunes. Note: While writing this, I noticed that the test here uses a previous generation of Qwen Image Edit; the more recent Qwen Image Edit 2509 is yet to be tested.

Nano Banana

The previous generation of the Nano Banana model behaves quite differently to the latest version.

Flux Kontext Pro

The model that proved the usefulness of editing models; it is over a year old now.

All Models Compared

Results breakdown

Model summary

| Model | Peak SSIM | Avg SSIM | Cost per image | SWG | TNTR |
|---|---|---|---|---|---|
| GPT-Image1 | 0.444 | 0.017 | $0.04 | — | — |
| GPT-Image1-mini | 0.289 | 0.135 | $0.02 | ✓ | — |
| Nano Banana Pro | 0.858 | 0.278 | $0.14 | — | — |
| Nano Banana | 0.655 | 0.262 | $0.039 | — | — |
| SeeDream 4 | 0.756 | 0.265 | $0.03 | ✓ | — |
| Qwen Image Edit | 0.932 | 0.251 | $0.03 | — | — |
| Flux Kontext Pro | 0.907 | 0.314 | $0.04 | ✓ | — |

Other findings

GPT-Image1-mini is consistently weird

I was most interested in GPT-Image1-mini, since it was the only model that produced consistently coherent images rather than degrading to static. I ran this model five times to see how the variations introduced during the process affected the final result. The final frames were surprisingly similar, with only one run failing to produce a variation of a demonic white dude.

Image coherence jumps

While most models trend to noise, several showed sudden jumps in image coherence. SeeDream 4 was particularly prone to this, showing four distinct coherence jumps.
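One way to flag such jumps automatically, assuming a jump shows up as a sudden rise in SSIM against the original from one generation to the next, is sketched below. The function name, the `min_rise` threshold, and the example scores are all made up for illustration. The jumps reported here may well have been spotted by eye.

```python
def coherence_jumps(ssim_scores, min_rise=0.05):
    """Return 1-based generation numbers where SSIM rose by more than min_rise.

    ssim_scores[0] is generation 1, ssim_scores[1] is generation 2, and so on.
    """
    return [
        i + 1
        for i in range(1, len(ssim_scores))
        if ssim_scores[i] - ssim_scores[i - 1] > min_rise
    ]


# Invented scores for illustration only, not measurements from these tests.
scores = [0.70, 0.55, 0.40, 0.52, 0.35, 0.30, 0.41, 0.25]
print(coherence_jumps(scores))  # → [4, 7]
```

The threshold is a judgment call: too low and ordinary wobble counts as a jump, too high and real recoveries get missed.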

So what did I learn?

I never really set out to learn anything with this; it was only ever a fun experiment. It also isn't very surprising that recursive generations with image editing models are lossy: asking a generative model to reproduce an image always loses something, and that delta compounds over time. What is interesting, though, is the breadth of variation in how these models degrade.
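As a back-of-the-envelope illustration of that compounding, suppose each pass independently kept a fixed fraction of the previous image's detail. This is a deliberately crude assumption, and the coherence jumps above show real models don't behave this uniformly. The function name and fidelity values below are hypothetical.

```python
def retained_after(per_pass_fidelity, passes):
    """Fraction of detail left if every pass keeps the same fixed fraction."""
    return per_pass_fidelity ** passes


for fidelity in (0.999, 0.99, 0.95):
    print(f"{fidelity:.3f} per pass -> {retained_after(fidelity, 100):.3f} after 100 passes")
```

Under this toy model, even a pass that is 99% faithful leaves only about 37% after 100 rounds, which is why small per-edit losses are hard to notice at first and impossible to ignore later.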

If there's a takeaway, it's that none of the frontier image editing models is safe from loss in recursive image editing. So beware of editing the edit of the edit; just write a better prompt the first time.

I'd love to know more about why these models behave so differently. If you can enlighten me, send me a DM.